NYPL Labs

Scribe: Toward a General Framework for Community Transcription

A couple of weeks ago, NYPL Labs was very excited to release Emigrant City, the Library's latest effort to unlock the data found within our collections.

But Emigrant City is a bit different from the other projects we’ve released in one very important way: this one is built on top of a totally new framework called Scribe, built in collaboration with Zooniverse and funded by a grant from the NEH Office of Digital Humanities along with funds from the University of Minnesota. Scribe is the codebase working behind the scenes to support this project.

What is Scribe?

Scribe is a highly configurable, open-source framework for setting up community transcription projects around handwritten or OCR-resistant texts. Scribe provides the foundation of code for a developer to configure and launch a project far more easily than if starting from scratch.

NYPL Labs R&D has built a few community transcription apps over the years. In general, these applications are custom built to suit the material. But Scribe prototypes a way to describe the essential work happening at the center of those projects. With Scribe, we propose a rough grammar for describing materials, workflows, tasks, and consensus. It’s not our last word on the topic, but we think it’s a fine first-pass proposal for supporting the fundamental work shared by many community transcription projects.

So, what’s happening in all of these projects?

Our previous community transcription projects run the gamut from requesting very simple, nearly binary input like “Is this a valid polygon?” (as in the case of Building Inspector) to more complex prompts like “Identify every production staff member in this multi-page playbill” (as in the case of Ensemble). Common tasks include:

- Identify a point/region in a digitized document or image

- Answer a question about all or part of an image

- Flag an image as invalid (meaning it’s blank or does not include any pertinent information)

- Flag others’ contributions as valid/invalid

- Flag a page or group of pages as “done”

There are many more project-specific concerns, but we think the features above form the core work. How does Scribe approach the problem?

Scribe reduces the problem space to “subjects” and “classifications.” In Scribe, everything is either a subject or a classification: Subjects are the things to be acted upon, classifications are created when you act. Creating a classification has the potential to generate a new subject, which in turn can be classified, which in turn may generate a subject, and so on.
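To make the subject/classification chain concrete, here is a minimal sketch in Python. It is not Scribe's actual code or schema: the class names, field names, the `classify` helper, and the "page"/"mark"/"transcribe" labels are all assumptions chosen for illustration. It only shows the idea that acting on a subject records a classification and may produce a new subject that can be classified in turn.

```python
from dataclasses import dataclass, field
from typing import Optional
import itertools

# Hypothetical ID generator; a real system would use database IDs.
_ids = itertools.count(1)


@dataclass
class Subject:
    """Something to be acted upon (illustrative shape, not Scribe's schema)."""
    kind: str                       # e.g. "page", "mark", "transcribe"
    data: dict
    parent_id: Optional[int] = None
    id: int = field(default_factory=lambda: next(_ids))


@dataclass
class Classification:
    """A record of one contributor acting on one subject."""
    subject_id: int
    contributor: str
    annotation: dict


def classify(subject, contributor, annotation, generates=None):
    """Record a classification and, optionally, generate a child subject."""
    classification = Classification(subject.id, contributor, annotation)
    child = None
    if generates is not None:
        child = Subject(kind=generates, data=annotation, parent_id=subject.id)
    return classification, child


# A page is marked, and the resulting mark is then transcribed.
page = Subject("page", {"image": "bond-record.jpg"})
_, mark = classify(page, "alice", {"x": 10, "y": 20, "label": "name"}, generates="mark")
_, text = classify(mark, "bob", {"value": "Smith"}, generates="transcribe")
chain = [page.kind, mark.kind, text.kind]
```

Each generated subject keeps a pointer to the subject it came from, so the path from a final assertion back to the original page can be walked through `parent_id`.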

This simplification allows us to reduce complex document transcription to a series of smaller decisions that can be tackled individually. We think breaking the work into small, atomic tasks makes projects less daunting for volunteers to begin and easier to continue. This simplification doesn’t come at the expense of quality, however, as projects can be configured to require multiple rounds of review.

The final subjects produced by this chain of workflows represent the work of several people carrying an initial identification all the way through to final vetted data. The journey comprises a chain of subjects linked by classifications connected by project-specific rules governing exposure and consensus. Every region annotated is eventually either deleted by consensus or further annotated with data entered by several hands and, potentially, approved by several reviewers. The final subjects that emerge represent singular assertions about the data contained in a document validated by between three and 25 people.

In the case of Emigrant City specifically, individual bond records are represented as subjects. When participants mark those records up, they produce “mark” subjects, which appear in Transcribe. In the Transcribe workflow, other contributors transcribe the text they see, and their transcriptions are combined with others’ as “transcribe” subjects. If there’s any disagreement among the transcriptions, those transcribe subjects appear in Verify, where additional classifications are added by other contributors as votes for one or another transcription. But this is just the configuration that made sense for Emigrant City. Scribe lays the groundwork to support other configurations.
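The Transcribe-to-Verify handoff described above can be sketched roughly as follows. This is an illustration under stated assumptions, not Scribe's implementation: the function names, the whitespace/case normalization, the `min_votes` threshold, and the strict-majority rule are all hypothetical, since the real consensus rules are project-specific configuration.

```python
from collections import Counter


def normalize(text):
    """Collapse whitespace and case so trivially different entries match (assumed rule)."""
    return " ".join(text.split()).lower()


def resolve_transcriptions(transcriptions):
    """Return ("complete", value) when all transcriptions agree, else ("verify", None)."""
    distinct = Counter(normalize(t) for t in transcriptions)
    if len(distinct) == 1:
        return "complete", next(iter(distinct))
    return "verify", None


def resolve_votes(votes, min_votes=3):
    """Return the transcription holding a strict majority once enough votes exist.

    Returns None while the question is still open. `min_votes` is a
    hypothetical threshold, not a value taken from Emigrant City.
    """
    if len(votes) < min_votes:
        return None
    value, count = Counter(normalize(v) for v in votes).most_common(1)[0]
    return value if count > len(votes) / 2 else None


agreed = resolve_transcriptions(["John Smith", "john  smith"])
disputed = resolve_transcriptions(["John Smith", "Jon Smith"])
winner = resolve_votes(["John Smith", "John Smith", "Jon Smith"])
```

In this sketch, agreeing transcriptions skip Verify entirely, while disputed ones wait until enough votes accumulate for one reading to win outright.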

Is it working?

I sure hope so! In any case, the classifications are mounting for Emigrant City. At the time of writing we’ve gathered 227,638 classifications comprising marks, transcriptions, and verifications from nearly 3,000 contributors. That’s about 76 classifications each, on average, which is certainly encouraging as we assess the stickiness of the interface.

We’ve had to adjust a few things here and there. Bugs have surfaced that weren’t apparent before testing at scale. Most issues have been patched and data seems to be flowing in the right directions from one workflow to the next. We’ve already collected complete, verified data for several documents.

Reviewing each of these documents, I’ve been heartened by the willingness of a dozen strangers spread across the US, Europe, and Australia to meditate on some scribbles in a 120-year-old mortgage record. I see them plugging away when I’m up at 2 a.m. looking for a safe time to deploy fixes.

What’s next?

As touched on above, Scribe is primarily a prototype of a grammar for describing community transcription projects in general. The concepts underlying Scribe formed over a several-month collaboration between remote teams. We built the things we needed as we needed them. The codebase is thus a little confusing in areas, reflecting several mental right turns when we found the way forward required an additional configuration item or chain of communication. So one thing I’d like to tackle is reining in some of the areas that have drifted from the initial elegance of the model. The notion that subjects and workflows could be rearranged and chained in any configuration has been a driving idea, but in practice the system obliges only a few arrangements.

An increasingly pressing desire, however, is developing an interface to explore and vet the data assembled by the system. We spent a lot of time developing the parts that gather data, but perhaps not enough on interfaces to analyze it. Because we’ve reduced document transcription into several disconnected tasks, the process to reassemble the resultant data into a single cohesive whole is complicated. That complexity requires a sophisticated interface to understand how we arrived at a document’s final set of assertions from the chain of contributions that produced it. Luckily we now have a lot of contributions around which to build that interface.

Most importantly, the code is now out in the wild, along with live projects that rely on it. We’re already grateful for the tens of thousands of contributions people have made on the transcription and verification front, and we’d likewise be immensely grateful for any thoughts or comments on the framework itself. Let us know in the comments, or directly via GitHub, and thanks for helping us get this far.

Also, check out the other community transcription efforts built on Scribe. Measuring the Anzacs collects first-hand accounts from New Zealanders in WWI. Coming soon, “Old Weather: Whaling” gathers Arctic ships’ logs from the late 19th and early 20th centuries.

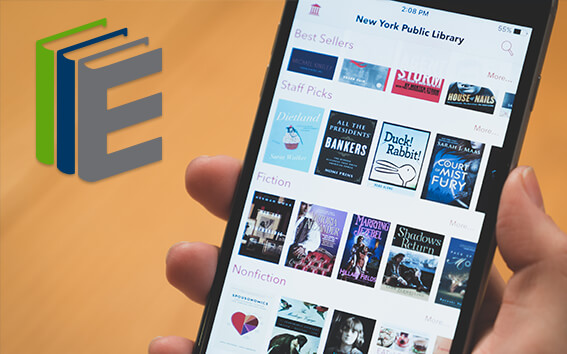
